JC: movement in sensory cortices

24 Sep 2020 | by bartulem

Parker et al. Trends in Neurosci (2020) review the literature on movement-related signals in sensory areas. It has long been thought that regions in the sensory pathway encode progressively more elaborate stimulus features, before relaying that information to downstream cognitive and motor areas which are poised to drive specific behaviors. They note that this feedforward model of sensory processing is likely an over-simplification, as it ignores top-down feedback and context modulation. Recent studies have made this issue more prominent, as primary sensory areas have been shown to encode various movement parameters, goal-directed actions and spontaneous body movements. The authors also believe the field has not necessarily benefited from relying heavily on head-fixed preparations, as more ethological paradigms where the animals naturally interact with their sensory environment might help clarify the role of movement signals in sensory computations. Locomotion seems to affect sensory processing differently in the visual and auditory systems. In visual cortices, it largely increases responses via changes in gain and spatial integration. On the other hand, locomotion generally decreases sensory responses in the auditory system, a finding linked to the requirement of filtering out self-generated noise.

There are also growing reports of cells encoding movement speed, conjunctions of movement and location, and sensorimotor mismatch between movement and vision. Even in head-fixed mice, during passive stimulus presentation, there was a lot of variability in the responses that could only be accounted for by behavioral variables, like locomotor speed, pupil diameter, and low-dimensional features extracted from videos of the face. As rodents investigate their environments, sniffing, whisking and head rotations cause the movement of sensory organs and have clear neural correlates, even in the absence of sensory stimuli. It is clear the motion of sensory organs can influence the statistics of sensory input, such that accurate inferences about what goes on in the world may require a correction mechanism stemming from the aforementioned movement signals. One principle potentially governing the intricate relationship between movement and sensation is the “reafference principle”, stating that self-generated stimulation (i.e. reafference) can be disambiguated from real stimulation (i.e. exafference) with self-generated motor signals, such as efference copy or corollary discharge (e.g. feedback from anterior cingulate or secondary motor cortex into the primary visual cortex can predict the consequences of motor actions on visual flow). Another is “predictive coding”, the idea that the brain constructs a model of the external world such that it can generate predictions of sensory inputs (e.g. movement-related activity can be a consequence of prediction error, due to a lack of expected sensory change).
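The reafference principle can be illustrated with a toy calculation. The sketch below is not taken from the review: it assumes a purely linear forward model in which a hypothetical gain (`forward_gain`) maps a head-turn command onto the expected visual flow, and all function names and numbers are illustrative.

```python
def predicted_reafference(motor_command, forward_gain=-1.0):
    """Expected self-generated visual flow (deg/s) for a head-turn command.

    Assumption: turning the head at +v deg/s sweeps the scene across the
    retina at -v deg/s, so the hypothetical forward model is a gain of -1.
    """
    return forward_gain * motor_command


def estimate_exafference(sensed_flow, motor_command, forward_gain=-1.0):
    """Subtract the efference-copy prediction from the sensed flow.

    The remainder is attributed to the external world (exafference).
    In predictive-coding terms the same quantity is a prediction error.
    """
    return sensed_flow - predicted_reafference(motor_command, forward_gain)


# Head turns at 80 deg/s in a stationary scene: all flow is self-generated.
stationary = estimate_exafference(sensed_flow=-80.0, motor_command=80.0)

# Same turn, but an object also drifts at +15 deg/s in the world.
moving_object = estimate_exafference(sensed_flow=-65.0, motor_command=80.0)

# Same turn in the dark: the expected flow never arrives, leaving a mismatch.
darkness = estimate_exafference(sensed_flow=0.0, motor_command=80.0)

print(stationary, moving_object, darkness)
```

In this toy model the residual is zero when the scene is stationary, equals the object's own motion when something moves, and is non-zero in darkness purely because an expected sensory change failed to occur.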

The authors advocate a field-wide shift towards studying motion signals in more naturalistic conditions. Head-fixation and operant task paradigms suffer from the inability to evoke complex stimulus-related movement dynamics, and this could be remedied if the animals were allowed to move freely and extract ethologically relevant stimulus features in their surroundings (e.g. mice pursuing and capturing prey, an activity which requires the coordinated interaction between sensory and motor systems). In this endeavor, advances in the measurement of behavior (e.g. pose estimation tools) are particularly helpful, as they also strongly motivate quantitative models of animals’ actions. Measuring sensory input in a freely moving paradigm is challenging, but camera technology has developed sufficiently to allow both measuring the subject’s point of view and recovering the stimuli on the retina. The same is increasingly true for other sensory modalities, e.g. whisker protractions and breathing patterns. Measuring large-scale neural activity traditionally required sacrificing behavioral flexibility, but the advent of imaging and ephys methods suited to unrestrained animals has decreased the need for this trade-off. The authors conclude with a set of outstanding questions:

- Will the freely moving paradigm bring into question any results obtained in experiments that utilized head-fixation?

- Are movement-related signals there just to cancel the effects of self-motion or do they do something more?

- What are the specific microcircuits/cell types involved in processing these signals?

- Do motion signals vary across sensory modalities?

- Are the rodent findings generalizable to other species?

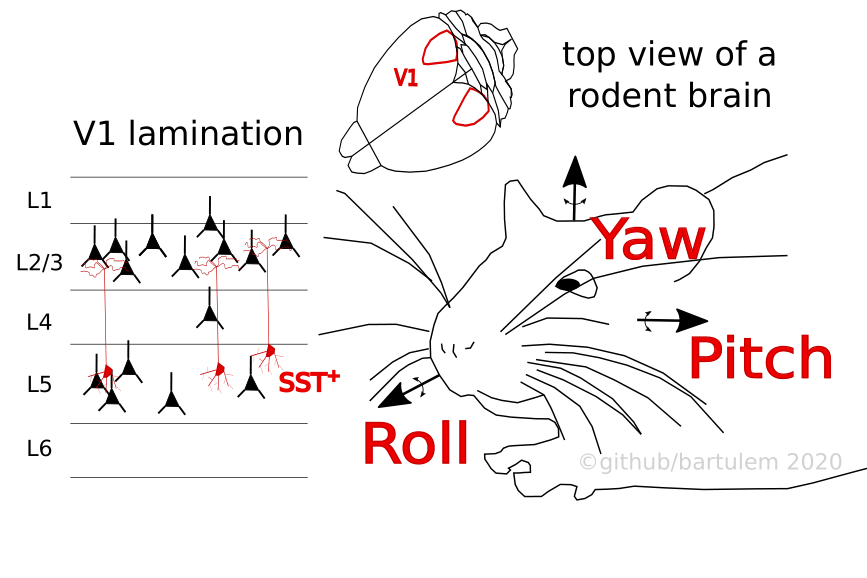

Bouvier et al. Neuron (2020) studied how the primary visual cortex (V1) processes head movements in the context of ambient lighting. Vestibular organs detect angular rotation and linear acceleration, yet no cortical area is solely dedicated to representing these signals. On the contrary, vestibular information is broadcast widely across cortical structures and it is not known how these signals are integrated with other sensory modalities to yield an estimate of whether a given stimulus results from the animal’s movement, rather than from a change in the environment. The authors head-fixed awake mice on a servo-controlled table, enabling the rotation of the animals along the horizontal plane (usually 50° rotations with a peak velocity of 80 °/s), while simultaneously using linear probes to do extracellular recordings across all layers of the left V1 in complete darkness. 62% of all recorded neurons (1 502 cells) were modulated either by clockwise (CW) or counter-clockwise (CCW) rotations (at times with differing response profiles in each condition), with responses that mimicked the velocity profile. The vestibular modulation index (vMI; indicates increase or decrease in activity relative to baseline) showed that the bulk of recorded cells (particularly in superficial layers L2/3 and L4) were characterized by a strong suppression of activity, while in deeper layers (L5 and L6) there was a balance between network excitation and suppression. Bilateral lesions of the vestibular organ completely abolished this effect.
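To make the idea of a modulation index concrete, here is a minimal sketch. The exact vMI definition used by Bouvier et al. is not reproduced in this summary, so the normalized-contrast form below is an assumption (it is a common way to build such indices), and the firing rates are invented for illustration.

```python
def modulation_index(rate_rotation, rate_baseline):
    """Normalized contrast in [-1, 1] for non-negative firing rates.

    Assumed form: (R_rot - R_base) / (R_rot + R_base).
    Negative values mean suppression, positive values mean excitation.
    A cell that is silent in both epochs is assigned an index of 0.0.
    """
    total = rate_rotation + rate_baseline
    if total == 0:
        return 0.0
    return (rate_rotation - rate_baseline) / total


# Hypothetical cells: (layer, baseline rate, rate during rotation), in spikes/s.
cells = [("L2/3", 8.0, 2.0), ("L4", 6.0, 3.0), ("L5", 5.0, 10.0), ("L6", 4.0, 4.0)]

indices = {layer: modulation_index(rot, base) for layer, base, rot in cells}

for layer, index in indices.items():
    print(f"{layer}: {index:+.2f}")
```

An index bounded between -1 and 1 makes cells with very different baseline rates comparable, which is what allows a layer-by-layer summary of suppression versus excitation.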

Repeating the experiment in light (nb: the mice’s eyelids were stitched shut so that visual stimuli would not contaminate the responses) confirmed prior findings that light itself has a suppressive effect on the baseline activity in superficial layers, but a facilitating impact in deeper layers. Importantly, head movements in light resulted in an overall effect of excitation, and perhaps even more interestingly, the larger the light-mediated suppression of baseline activity, the larger the rotation-mediated excitation effect (a result that held even after eye movements were abolished). A mechanism that could account for luminance-dependent modulation could entail a differing net effect of a specific inhibitory neuron class, a class excited by head movements in the dark, yet inactive during the light condition. In V1, regular spiking (RS) cells are mainly targeted by two types of inhibitory neurons: fast-spiking (FS) parvalbumin (PV) cells and somatostatin (SOM) cells. In accordance with prior research, a fraction of FS cells (putative PV cells) were excited by head movements in the dark, but largely responded the same way in the light, making them an unsuitable candidate. The attention turned to SOM cells, which were opto-tagged with Channelrhodopsin 2 in SOM-Cre mice. Superficial SOM cells exhibited the same pattern of modulation as other cell types, but SOM cells in deeper layers displayed the opposite trend: they were excited by head movements in the dark, but suppressed in light.

The unilateral ablation of SOM cells in V1 predominantly affected RS cells in the superficial layers and resulted in a reduction of both the movement-mediated activity suppression in the dark and the motion-mediated facilitation in light. Conversely, selective ablations of PV and serotonergic 5HT3aR inhibitory cells had little effect on the responses of RS cells to head movements. Finally, the authors report results of an experiment in freely moving mice, where they recorded single-cell activity and measured rotational velocities with head-mounted inertial measurement units (IMUs), focusing primarily on the horizontal component of head movements. Much like in the head-fixed condition, the average V1 activity was suppressed by head movements in the dark, and facilitated in the light (with the accompanying layer-dependent specificities). These findings jointly suggest a mechanism whereby the luminance-dependent ensemble rate differences rely on SOM cells: in the dark, SOM neurons suppress RS cells in response to head movements, but release their suppression in light, which in turn enables the facilitation of RS cell activity.

Guitchounts et al. Neuron (2020) continuously recorded L2/3 activity of V1 in freely moving rats with interleaved 2 h light and dark sessions, as the animals spontaneously navigated around their home cages. Each rat carried a head-mounted IMU to capture motion in all three planes. The analyses were focused on head-orienting movements (HOMs; velocity threshold of 100 °/s) along individual directions (yaw, roll and pitch) and bouts of overall movement (L2 norm of linear acceleration components). Regardless of ambient lighting, both single-unit (RS and FS) and multiunit activity (MUA) were higher during overall movement, compared to rest. More specifically, HOMs (~8% of the overall movement time) were associated with a suppression of firing rates in the dark, but with an increase of firing rates in the light, with responses usually deviating from baseline nearly a second before movement onset. Training a decoder with session- and tetrode-averaged MUA or single-unit responses in the 500 ms around peak velocity yielded good predictions of the HOM direction (which plateaued 100 ms after peak velocity).
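The two behavioral measures mentioned above can be sketched in a few lines. This is a simplified illustration rather than the authors' pipeline: it assumes an already-calibrated gyroscope trace for a single axis (in °/s) and raw accelerometer triplets, and it ignores steps a real analysis would likely need (filtering, minimum event duration, gravity removal). The function names are hypothetical.

```python
import math


def overall_movement(accel_xyz):
    """L2 norm of the three linear-acceleration components, per sample."""
    return [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in accel_xyz]


def detect_homs(angular_velocity, threshold=100.0):
    """Find runs of samples whose absolute velocity exceeds the threshold.

    Returns a list of (start, end, direction) tuples, where `end` is
    exclusive and `direction` is +1 or -1 (the sign of the velocity).
    A sign flip while above threshold starts a new event.
    """
    events = []
    start = None
    sign = 0  # 0 = below threshold, otherwise the sign of the current run
    for i, v in enumerate(angular_velocity):
        current = (1 if v > 0 else -1) if abs(v) > threshold else 0
        if current != sign:
            if sign != 0:
                events.append((start, i, sign))
            start = i
            sign = current
    if sign != 0:
        events.append((start, len(angular_velocity), sign))
    return events


# Hypothetical yaw velocity trace (deg/s) containing two turns in opposite directions.
yaw = [0, 20, 120, 150, 90, -30, -110, -130, -40, 0]
homs = detect_homs(yaw)

# Hypothetical accelerometer samples (arbitrary units).
movement = overall_movement([(3.0, 4.0, 0.0), (0.0, 0.0, 0.0)])

print(homs, movement)
```

Keeping the direction of each event alongside its time span is what later allows responses to opposing turns to be compared, or a direction decoder to be trained.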

When comparing responses of single neurons to opposing HOM directions, it transpired that large fractions of individual units responded differently to contrary movement directions (e.g. high rate during right, but low rate during left turns). In addition, the ensemble largely responded differently to opposing ambient lighting during the same HOMs (e.g. high rate during right turns in light, but low rate in dark), to the point where the cells’ preferred directions in one lighting condition were not correlated to those in the other. This could suggest the existence of two separate populations: one tuned to direction in the absence, and one tuned to direction in the presence of visual cues. The authors proceeded to study HOM tuning in the context of visual stimulation, by flashing LEDs in the animals’ cages (500 ms on, 400-600 ms off) as they behaved freely. Relative to resting epochs’ responses to flashes, MUA firing rates were higher for overall movement, but lower during HOMs (a finding echoed in the single unit data as well), suggesting the visual system may decrease the gain of visual responses during orienting. Finally, bilaterally lesioning the secondary motor cortex (M2; which is reciprocally connected to V1) brought about a diminishment of HOM-related responses, where the suppression of activity in dark was more attenuated than the facilitation during light epochs.

Guitchounts et al. bioRxiv (2020) utilized the same dataset with freely moving rats to quantify the extent of head direction (HD) modulation in V1, measuring all three directional components (yaw, pitch and roll) with head-mounted IMUs. A linear decoder that took MUA as input predicted all three components well beyond chance (although performance was higher for roll and pitch), a result that was invariant to the ambient luminance. Interestingly, in M2-lesioned animals the performance of the decoder was only degraded for the pitch variable, relative to non-lesioned rats, suggesting that the HD tuning was largely M2-independent. Individual neurons could prefer any direction along any of the three axes, with 20-25% of cells exhibiting conjunctive preference for angles in all three planes (even though the tuning widths were substantially wider than those recorded in e.g. anterior thalamus). The exploration of tuning stability (over a seven-day period) demonstrated that for both light and dark, and all orienting directions, the signal was quite stable. This extended to tuning peak position as there was little discrepancy between lighting conditions (drift was highest for yaw, consistent with other findings), suggesting the absence of visual cues does little to perturb directional preference.
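For readers unfamiliar with linear decoding, the following sketch shows the general idea on synthetic data. It is not the authors' decoder: it assumes plain ordinary least squares with a bias term, treats the head angle as a linear (non-circular) variable, and uses invented channel rates and weights. Only the Python standard library is used, so the normal equations are solved by hand.

```python
import random


def fit_linear_decoder(features, targets):
    """Ordinary least squares with a bias term.

    Solves the normal equations (X^T X) w = X^T y by Gauss-Jordan elimination.
    features: one row per time bin, each a list of channel firing rates.
    targets:  one head angle (deg) per time bin.
    Returns the channel weights followed by the bias.
    """
    rows = [list(r) + [1.0] for r in features]  # constant column for the bias
    n = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(n)]
           + [sum(r[i] * y for r, y in zip(rows, targets))]
           for i in range(n)]
    for col in range(n):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        if abs(aug[col][col]) < 1e-12:
            raise ValueError("singular design matrix")
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        for k in range(n):
            if k != col:
                factor = aug[k][col]
                aug[k] = [a - factor * b for a, b in zip(aug[k], aug[col])]
    return [aug[i][n] for i in range(n)]


def decode(weights, feature_row):
    """Predict the head angle for one time bin."""
    return sum(w * x for w, x in zip(weights, list(feature_row) + [1.0]))


# Synthetic example: three hypothetical MUA channels linearly related to pitch.
rng = random.Random(0)
true_weights = [2.0, -1.5, 0.5, 10.0]  # three channel weights + bias (invented)
mua = [[rng.uniform(0, 20) for _ in range(3)] for _ in range(200)]
pitch = [decode(true_weights, row) + rng.gauss(0, 1.0) for row in mua]

# Fit on the first 150 bins, evaluate on the held-out 50.
weights = fit_linear_decoder(mua[:150], pitch[:150])
errors = [abs(decode(weights, row) - y) for row, y in zip(mua[150:], pitch[150:])]
mean_abs_error = sum(errors) / len(errors)
```

Evaluating on held-out time bins, as done here, is what makes a "beyond chance" claim meaningful; a shuffled-label control would be the natural next step.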

This set of findings offers novel insights into the complex nature of sensorimotor relationships, but also charts territory for further explorations. Much of that future work might investigate how sensory processing (studying different modalities would be beneficial) is affected by the emergent motion signals. It is yet to be shown whether the net suppression effect in darkness serves as a veto in unreliable dim circumstances, or if light aids in integrating multisensory signals, such that rates have to be elevated. Subsequent work which would include eye tracking and visual stimulation, thereby helping estimate visual flow, could shed light on what these responses are useful for. If the animal moves its head CW, and the brain has to determine whether the stimulation is endogenous or exogenous, it might be beneficial if the CW-motion cells facilitated the CW-flow cells. Since both CW- and CCW-flow cells would then be active (because the real visual flow during the turn is CCW), their net effect would be zero, simplifying the decision. Another interesting point of consideration involves studying the upstream sources of the motion signal, to characterize it more precisely. Is this widespread signal purely vestibular by nature, or is it more complex, involving proprioceptive input features and motor efference copy (as suggested by the M2 lesioning results)?